A Slightly Biased Short History of Geostatistics

Geostatistics has deep roots. Some of the technical results date back to Kolmogorov and Wiener’s independent work on time series in the 1930s and 1940s, but the subject really began to evolve around the disciplines of mining, meteorology and forestry in the 1950s and 1960s. The most consistent development was at the School of Mines in Paris, where a young researcher, Georges Matheron, became interested in the problem of estimating gold reserves in South Africa.

An engineer, Danie Krige, had noted that the grades of mining blocks were much less variable than the grades of the core samples used to estimate them, and he used a regression technique to account for this Support Effect. Matheron analyzed, formalized, and generalized these results into a general-purpose spatial estimation tool with built-in Support Effect management. He named it kriging in honor of the South African who had motivated his research. He went on to found the Ecole des Mines’ famous Centre of Geostatistics and Mathematical Morphology in Fontainebleau, where his early students did great things of their own in geostats, morphology, and even pure math. The roll call is long, but Andre Journel, Jean Serra, and Jean-Paul Chiles give a flavor.

Matheron was something of a genius, though a slightly reclusive one. He wrote hundreds of papers, some practical, some very theoretical, but he published almost none of them. Instead, he numbered them and put them in the center’s library, so to find out the latest, you had to go there. It would be difficult to give a full account of his research, but suffice it to say that most of the tools used in contemporary geostatistics still bear Matheron’s mark. Object modelling (1975), Gaussian simulation (1972), and the truncated Gaussian method (1988), as well as lesser-known work such as his results on upscaling and the first proof that Darcy’s law derives from the Navier-Stokes equations, give just a glimpse of the more applied results he produced.

Matheron wasn’t always the best at referencing the work of others, and he was criticized for this in a world that increasingly rewards a certain kind of academic conformity. One explanation is that he simply re-derived results whenever he needed them. For the math geeks amongst us, suffice it to say that when he was working on his theory of random sets (which led to object models), he independently re-derived Choquet’s capacity theory.

I was lucky enough to study at the center in the mid to late 1980s. One source of terror was Matheron’s exams, which you needed to pass to get onto the PhD program. I remember that the median exam score was about 7.5% and the mean about 20%, so the results followed a long-tailed (possibly lognormal) distribution. I guess there was some kind of internal geostatistical joke going on there.

It was in the late 1980s and early 1990s that the oil industry started to take up geostatistics seriously, initially in research and development departments. Andre Journel started the SCRF (Stanford Center for Reservoir Forecasting) group at Stanford University, which did so much to popularize the technology, and his freeware GSLIB code was developed over this period.

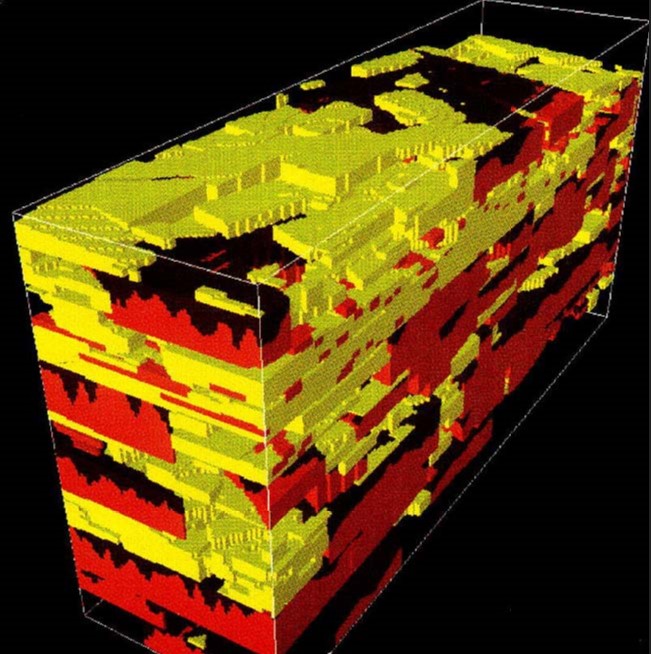

One of his earlier students, Georges Verly, was developing the first commercial-quality SIS (sequential indicator simulation) algorithm at BP. Those ideas eventually drifted back into GSLIB. He managed to recruit me to the industry with tales of glamour and riches, which I’m still waiting for. It was an exciting time, with most of the majors having strong geostats groups doing lots of really interesting work. At that time, we often wrote bespoke geostats code and imported the results into a visualization tool such as Stratamodel (fig 2). Only a fraction of the ideas from that time have made it into commercial code, though no doubt more should have.

There were all kinds of start-ups developing geostatistics and structural modeling packages, some better than others. For me, one of the big commercial breakthroughs came in the mid-1990s, when a Norwegian company, Geomatic, brought out RMS, the first software tool to integrate structural modeling, geostatistics, and upscaling. It began to make small inroads into selling the technology directly to end users, rather than leaving modeling to small specialized teams. After a merger and a change of ownership, Geomatic became Smedvig Technologies and eventually Roxar, and some of the original developers left.

Around this time, I joined Roxar, as R&D work was drying up at BP and I wasn’t too interested in becoming a reservoir engineer in Aberdeen. By 2000, I was sitting on the Roxar board as an employee representative, so I know that the total value of the reservoir modeling market at that time was only about 30 million dollars per year. The vast majority of that was earned by Roxar, but the few who had left after the merger had started a little company that was beginning to make some waves with a product called Petrel.

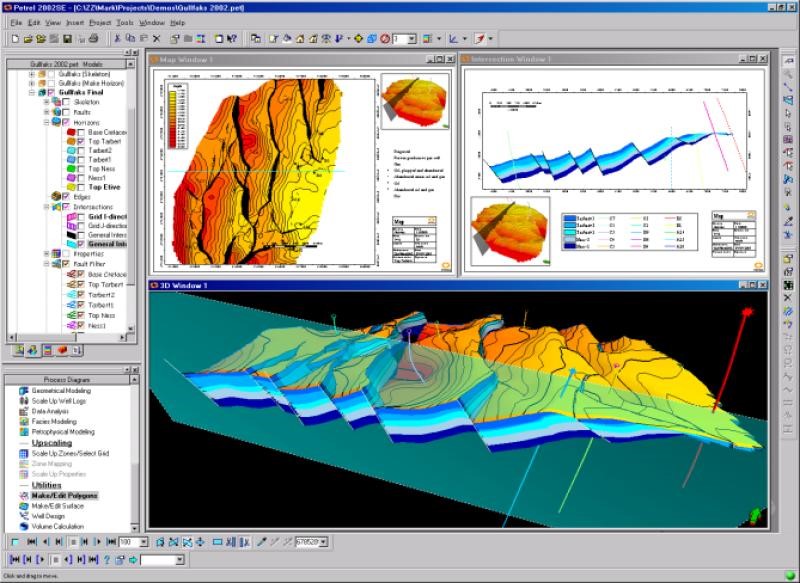

Petrel, like Geomatic before it, really focused on usability. Arguably, it was initially a weaker technical solution than RMS for geostatistics, relying on GSLIB and a simple object modeling tool, but because this was a ‘second time around’ product, the team really nailed the delivery. It looked great, did the basics like loading data and constructing a simple structural model very well, and had an intuitive user interface. They even made a tentative attempt at handling seismic data; it was not terribly powerful initially, but it made a big statement about where things should go.

Schlumberger took an interest and became the first major company to offer a credible reservoir modeling solution when it purchased Petrel in 2003. This led to explosive growth, as this niche idea suddenly became available to the mainstream.

Geostatistics is now used routinely in the construction of reservoir models across the world. The toolkit in Petrel has been continually developed since 2003, with significant algorithm development and many new features. The Petrel E&P software platform is a well-established product these days, and reservoir modeling, both structural and property modeling, can be proud to have been at the core of this growth.

Where next? Well, I think machine learning has a lot to offer reservoir modeling, but it is important that we keep the gains that were so hard fought. We must condition better to data and focus on STOOIP (stock tank oil initially in place) and connectivity when estimating reserves.

Most of all, we must remember that models are built for a purpose, be it well planning or flow simulation. Algorithms developed, or models constructed, without the end point in mind are not really fit for purpose. Schlumberger has the people, from algorithm developers to geoscience and reservoir engineering experts, to guide us. By working as a team, we should be able to keep our noses in front for some time to come.

Author information: Colin studied pure mathematics at Trinity College Dublin and did a PhD in Geostatistics at the Ecole des Mines in Paris. He joined BP in 1991 and spent a spell at Roxar before joining Schlumberger in 2006.

Despite having done modeling work most of his adult life, he still enjoys it and will happily bore you to tears talking about it over a coffee, or even worse, over a beer. After years in portfolio work keeping customers happy, he decided that it was time to get back to playing around with algorithms. He is currently working on an algorithm that merges machine learning and geostats, in the hope that it will be easy enough to use to keep customers happy. Outside of work, he is chair of the Vortex Jazz Club, which was recently voted seventh best in the world, so he is now dedicated to beating at least six other jazz clubs. If you are in London, do drop by and say hello; we need the audience. He also co-leads a team of 30 associate hospital managers for the Central and North West London Mental Health Trust.